Big Blue reveals IBM Vela AI supercomputer

In a blog post published earlier this week, IBM announced its cloud-native IBM Vela AI supercomputer. The system, which is housed within IBM Cloud, has been operational since May of last year. Currently, only five of IBM Research’s AI researchers can use it.

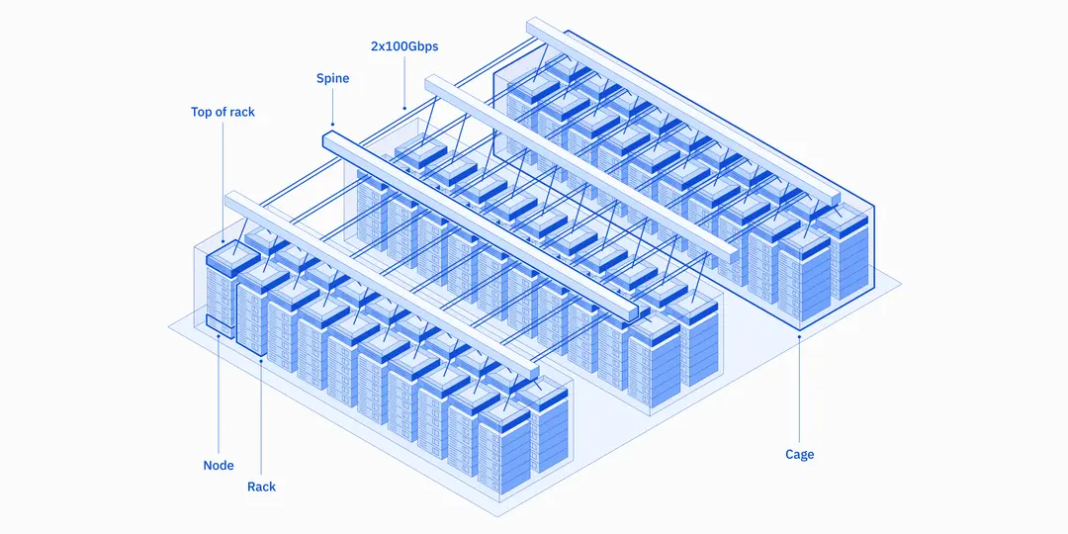

The company discussed the choices it made to adapt the traditional supercomputer model to AI workloads using low-cost but powerful hardware. According to IBM, “building a traditional supercomputer has meant bare metal nodes, high-performance networking hardware, parallel file systems, and other items usually associated with high-performance computing (HPC).”

“Vela is now our go-to environment for IBM Researchers creating our most advanced AI capabilities, including our work on foundation models, and is where we collaborate with partners to train models of many kinds,” stated IBM.

To give researchers more flexibility and let them provision and reprovision the infrastructure with different software stacks, IBM said it chose virtual machines over conventional bare-metal nodes. The company said it had developed a way to expose all of a node’s resources (GPUs, CPUs, networking, and storage) to the virtual machine, reducing virtualization overhead to less than 5%, which IBM called “the lowest overhead in the industry.”
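The article does not say how IBM measured its sub-5% figure. One common way to express virtualization overhead is the relative throughput lost when the same workload runs inside a VM instead of on bare metal. The sketch below assumes that definition; the function name and the throughput numbers are hypothetical and are not IBM’s measurements.

```python
def virtualization_overhead(bare_metal_throughput: float, vm_throughput: float) -> float:
    """Relative throughput lost inside the VM.

    Assumed definition for illustration, not IBM's published method:
    (bare metal - VM) / bare metal.
    """
    if bare_metal_throughput <= 0:
        raise ValueError("bare_metal_throughput must be positive")
    return (bare_metal_throughput - vm_throughput) / bare_metal_throughput


# Hypothetical measurements of the same training job, in samples per second.
overhead = virtualization_overhead(bare_metal_throughput=1000.0, vm_throughput=960.0)
print(f"overhead: {overhead:.1%}")  # overhead: 4.0%
```

Under this definition, a VM that keeps 96% of bare-metal throughput would fall within the 5% bound IBM cites.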

“We can efficiently use our GPUs in distributed training runs with efficiencies of up to 90 percent and beyond for models with 10+ billion parameters,” stated IBM. “Next, we’ll be rolling out an implementation of remote direct memory access (RDMA) over converged ethernet (RoCE) at scale and GPU Direct RDMA (GDR), to deliver the performance benefits of RDMA and GDR while minimizing adverse impact to other traffic. Our lab measurements indicate that this will cut latency in half.”
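IBM does not spell out how it computes distributed training efficiency. A widely used measure is scaling efficiency: the aggregate throughput of a multi-GPU run divided by the ideal linear throughput (number of GPUs times single-GPU throughput). The sketch below assumes that definition; the function name and the run figures are hypothetical.

```python
def scaling_efficiency(aggregate_throughput: float,
                       single_gpu_throughput: float,
                       num_gpus: int) -> float:
    """Measured throughput divided by ideal linear scaling.

    Assumed definition: aggregate / (num_gpus * single-GPU throughput).
    """
    if num_gpus <= 0 or single_gpu_throughput <= 0:
        raise ValueError("num_gpus and single_gpu_throughput must be positive")
    ideal = num_gpus * single_gpu_throughput
    return aggregate_throughput / ideal


# Hypothetical run: 64 GPUs, 100 samples/s on one GPU, 5,760 samples/s in aggregate.
eff = scaling_efficiency(aggregate_throughput=5760.0,
                         single_gpu_throughput=100.0,
                         num_gpus=64)
print(f"scaling efficiency: {eff:.0%}")  # scaling efficiency: 90%
```

An efficiency of 0.9 means communication and synchronization cost the run about a tenth of the throughput that perfect linear scaling would deliver.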

Each node in the IBM Vela AI supercomputer contains eight 80GB A100 GPUs, two 2nd Gen Intel Xeon Scalable processors (Cascade Lake), 1.5TB of DRAM, and four 3.2TB NVMe storage drives. The nodes are networked through multiple 100G network interfaces.
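Multiplying out the per-node GPU and NVMe figures gives totals that are easier to compare with other systems. This is a minimal sketch of that arithmetic; the `VelaNode` class and its field names are illustrative only and are not part of any IBM API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VelaNode:
    """Per-node GPU and NVMe figures as reported for IBM Vela (decimal GB/TB)."""
    gpus: int = 8
    gpu_memory_gb: int = 80       # A100 80GB variant
    nvme_drives: int = 4
    nvme_drive_tb: float = 3.2

    @property
    def total_gpu_memory_gb(self) -> int:
        # Aggregate GPU memory across all GPUs in one node.
        return self.gpus * self.gpu_memory_gb

    @property
    def total_nvme_tb(self) -> float:
        # Aggregate local NVMe capacity in one node.
        return self.nvme_drives * self.nvme_drive_tb


node = VelaNode()
print(node.total_gpu_memory_gb)       # 640
print(round(node.total_nvme_tb, 1))   # 12.8
```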

Vela’s AI workloads can use any of the more than 200 IBM Cloud services available, and although the work runs in the cloud, IBM has said its architecture could also be adopted for on-premises AI system design.